Like currency flooding into an economy, we’re experiencing what might be called cognitive inflation. The volume of content is exploding, but its actual value is plummeting.

Tell me this has not happened to you. You download a beautifully formatted report. Stacked full of details and charts. Looks professional. Reads smoothly.

And yet…it’s bland, colorless and absolutely feckin’ useless.

Welcome to workslop, the new currency of cognitive inflation.

We're fooling ourselves that having access to so much more information means we now know so much more. In fact, the opposite is happening.

The Workslop Economy

A recent Harvard Business Review article identified a phenomenon it calls 'workslop'. Employees are using AI tools to create low-effort, passable-looking work that ends up creating more work for their coworkers.

Damien Charlotin, a legal expert and commentator, talks of AI-derived filler appearing in court documents “…in a few cases everyday…”

Consider this: K&L Gates got censured and fined in May of this year. Top international law firm. Serious chops. Judge found their case riddled with AI fabrications. Nine out of 27 citations were bollox. They corrected it. Submitted new documents. Six more AI-derived mistakes. This is a firm charging each individual lawyer (in a team of 10) out at north of $2,500 an hour.

When someone sends AI-generated content, they're not just using a tool. They’re transferring cognitive burden to recipients who must interpret, verify, correct, or redo the whole thing.

The numbers are sobering. 42% report having received workslop in the last month. Half view colleagues who send it as less trustworthy. Whether it's McKinsey or your rivals, I cannot believe you have not come across this sudden flood of reports, white papers and think pieces that reek of…nothing…just soulless words, neatly placed together.

Workslop perfectly encapsulates cognitive inflation. More content. Less meaning. The signal-to-noise ratio is collapsing. Trust in any information becomes impossible without extensive verification, which, frankly, nobody appears to have the time for.

Literacy: The Line Between Power and Danger

Recently I read a brilliant article by James Marriott: ‘The dawn of the post-literate society’ (link in comments). His research is stark. Reading for pleasure has fallen by 40% in America in the last twenty years. In the UK, more than a third of adults say they've given up reading altogether.

Children's reading skills have also been declining yearly post-pandemic. Experts link this decline not only to decreased traditional reading but also to reduced critical thinking and comprehension.

The National Literacy Trust reports reading among children is now at its lowest level on record.

It’s not just about books.

But about how we think.

The world of print, Marriott argues, is orderly, logical and rational.

Books make arguments, propose theses, develop ideas. "To engage with the written word," the media theorist Neil Postman wrote, "means to follow a line of thought, which requires considerable powers of classifying, inference-making and reasoning."

"The world after print increasingly resembles the world before print,"

Marriott writes. As our cognitive capabilities diminish, we're creating AI systems in our increasingly confused image. We're building the dumbing down of the user into the foundations of our future technologies.

As books die, we are in danger of returning to pre-literate habits of thought. Discourse collapsing into panic, hatred and tribal warfare. The discipline required for complex thinking is eroding.

This is the environment those on the right are thriving in. They flourish amongst populations with limited capacities for inference-making and reasoning. For more on this, see the excellent ‘Segmentation of the Far Right’ from Steven Lacey / The Outsiders (link in comments).

I heard a great podcast this week: Geoffrey Hinton, one of the architects of today's AI, in conversation with Steven Bartlett (link in comments).

Hinton warns: We're making ourselves stupider before we understand how to use AI safely. That cognitive decline will be baked into the next generation of AI systems we build.

It's that last bit that's most worrying.

Hinton told investors at a recent conference that instead of forcing AI to submit to humans, we need to build 'maternal instincts' into AI models. Because if we don’t, the temptation amongst bad actors is to do the opposite. Hinton's nightmare scenario isn't just more cyber attacks, though those are crippling institutions worldwide. It's weaponised AI creating Covid-style viruses.

This is not just about having the wrong tools or using them badly. It's about losing the ability to question the value of what is being produced.

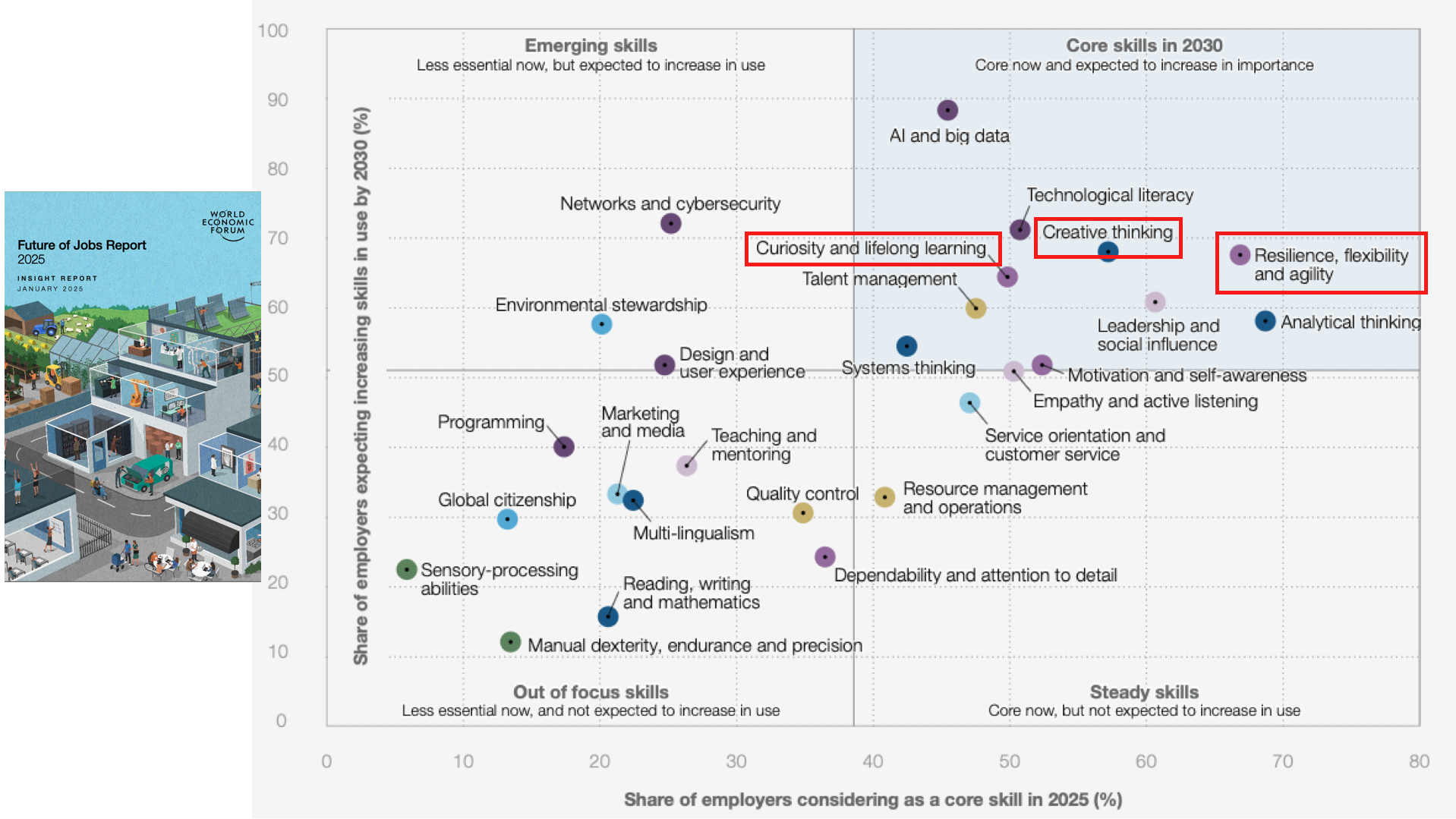

The World Economic Forum identifies curiosity, creative thinking, and flexibility as core skills for future workplace success. It's intriguing how we've arrived at the need to really value basic human traits in our most technologically advanced era of AI.

Competency without curiosity creates professional dead ends. The most valuable professional asset isn't knowing everything. It's maintaining the discipline to approach everything as if you know nothing.

Yet literacy, the foundation of that discipline, is collapsing. Many point to it beginning with smartphones, heightening during Covid lockdowns, and now being supercharged by AI. Although, on that last point, many amazing educators are harnessing AI in a desperate attempt to reverse this trend.

But universities are now teaching their first truly 'post-literate' cohorts.

"Most of our students are functionally illiterate,"

according to one despairing university entrance assessment.

Productivity Everywhere, but Nowhere

This is cognitive inflation's cruelest joke. We have more tools than ever. More information than ever. More 'productivity' software than ever. Yet productivity just... stalls. (In the UK, we've managed a princely +0.5% annually for the last decade…stunning, you’ll agree.)

When efficiency becomes the only goal, the outcome is always the same: a world of increasing activity and decreasing value. We mistake motion for progress. Busyness for productivity. Access for understanding.

Companies are investing billions in AI tools. Most are not seeing any kind of measurable return. (Other than that their head count has dropped, and they can’t understand why their Glassdoor reviews have done the same.) The MIT Media Lab found that 95% of organisations have yet to see a measurable return on their investment in AI tools. So much activity, so much enthusiasm, so little return. And yet…

…our capacity to think deeply, to read carefully, to reason logically, erodes. We're not getting smarter. We're just getting louder.

What Comes Next

Without intervention, we're heading toward a workplace where nobody trusts anyone else's work, where verification becomes impossible, where cognitive capacity wastes away like an unused muscle. We become like medieval peasants, but with better WiFi.

‘Pilots’ or ‘Passengers’ is a great analogy for how workers are currently using AI. Pilots are navigating their own course, with AI as an instrument of work. Passengers are, well, sat back letting AI take them where they need to be. (See more at: BetterUp Labs/Stanford University.)

Zoe Scaman has just written a brilliant article about helping major organizations roll out AI strategy and seeing passenger behaviour everywhere (read ‘The Great Erosion’, link in comments). She talks of people outsourcing the twenty percent of work that's genuinely hard thinking and keeping the eighty percent that's just formatting and execution. Backwards. Catastrophic. Because the twenty percent is where you build the muscles. That's not theory. That's happening in organizations today. The cognitive damage is immediate. The supposed productivity gains? Years away, if they arrive at all.

If you're a leader, your most important job isn't to buy more AI tools. It's to build in space and time where your team strengthens the thinking muscles that AI is surreptitiously stealing.

Most importantly, we all need to recommit to the hard work of thinking.

Yes, that also includes reading.

And the discipline of following an argument, weighing evidence, and changing our minds when presented with better information. (Again, see the rise of the far right in this context.)

This challenge isn't technical. It's rational. The question isn't whether we can build better AI. It's whether we can remain capable of using it wisely.

In an age of cognitive inflation, attention is the scarcest resource. Not information.

It’s the ability to actually focus, to think deeply, to spot signal in all the noise.

Our futures belong not to those with access to the most information, but to those who retain the ability to think about it clearly.

We've built an economy where information is infinite yet attention is seemingly worthless.

Where everyone has an opinion but nobody has time to think.

Where our tools grow exponentially more powerful while our minds grow…well, let's be honest, weaker.

This. Can. Only. End. Badly.

How this ends depends on whether we're willing to do the one thing our AI-saturated culture makes most difficult: slow down, focus, and think.

We all need to (metaphorically) get up, go for a walk and explore the ideas in our own heads.

We need to celebrate the illogical, lateral, properly weird thinking that only humans do well. The very thing AI can't replicate and, worse, is teaching us to devalue.

Because the only way out is through the hard work of becoming human again.

Conceived and written by a dyslexic human, Me. Made readable by Claude.ai

This whole article wouldn’t have been possible without the amazing inspiration of the following writers:

‘AI-Generated “Workslop” Is Destroying Productivity’

‘The dawn of the post-literate society’

Geoffrey Hinton in conversation with Steven Bartlett

‘Segmentation of the Far Right’

‘Pilots & Passengers: The next Evolution in management’

Zoe Scaman ‘The Great Erosion’